Mind-Body Problem: How Consciousness Emerges from Matter

Subjective experience is built from physically encoded internal representations.

Posted January 20, 2023 | Reviewed by Tyler Woods

Key points

- The mind is the brain’s internal model of the body and environment. It is a physically encoded web of representations—a correlational map.

- Internal representations are key to understanding how sensory perceptions of stimuli from the physical world are turned into mental phenomena.

- Representations have valence. This forms the basis for affect and emotion. Affect infuses self-representations with feeling and agency.

- The brain forms prediction models. When incoming stimuli match expectations, a state of resonance occurs, which generates conscious experience.

“Conscious experience is at once the most familiar thing in the world and the most mysterious. There is nothing we know about more directly than consciousness, but it is far from clear how to reconcile it with everything else we know. Why does it exist? What does it do? How could it possibly arise from lumpy gray matter?” -David J. Chalmers, The Conscious Mind: In Search of a Fundamental Theory (1996)

The mystery of subjective experience, also known as the "hard problem of consciousness," refers to the question of how and why we have subjective experiences at all. The subjective, first-person experiences of sensory and mental phenomena, such as the redness of a rose or the taste of a lemon, are referred to as "qualia." The ultimate quest of neuroscience is to explain how the brain produces qualia, and how it produces a distinct sense of self: an entity that experiences those qualia and that also possesses agency, a coherent sense of stability and continuity, and a personal narrative.

Key to understanding the link between the physical and mental worlds are internal representations—also referred to as mental representations or cognitive representations. Subjective experience is built from internal representations.

Internal representations

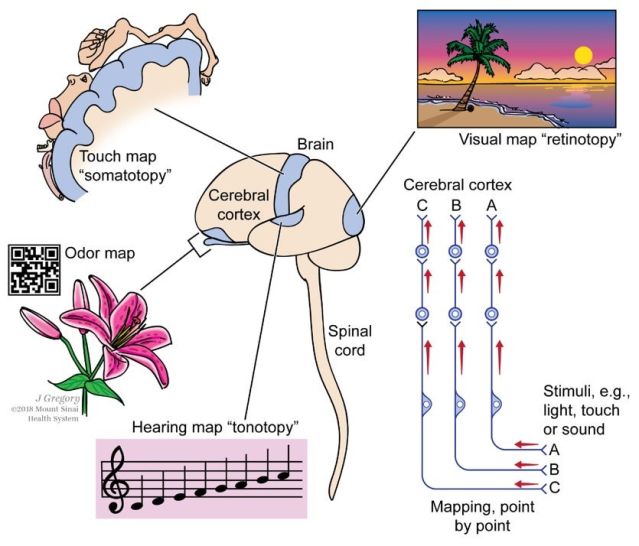

Internal representations are images or models of the body and the world. They have a relational nature to the reality they map—they are built from and characterized by direct correlations to physical things. They are the way that sensory perceptions of the physical world get turned into mental phenomena, which in turn have causal power on the physical world. Internal representations begin with an animal’s sense organs receiving physical signals from its environment. Those signals may be visual (photons), auditory (air vibrations), touch (mechanical stimuli), smell (chemicals), etc. Figure 1 illustrates in simplified form how internal representations are formed from sensory perceptions, according to a theory elaborated by psychiatrist-neurologist Todd Feinberg and evolutionary biologist Jon Mallatt.

Figure 1. Mapped isomorphic organization of the exteroceptive sensory pathways. Each sensory pathway of several neurons (right) is a hierarchy that carries signals up to the brain, keeping a point-by-point mapping (A, B, or C) of a body surface, a body structure, or the outside world. This mapping leads to the mapped mental images that are drawn around the brain. The touch map of the body (upper left) includes a cut section through the folded cerebral cortex. The bar code associated with the flower at left shows that each complex odor has its own, coded scent signature. [1]

Because of their physical correspondence with what they are mapping, the representations may be referred to as isomorphic maps, or (in the case of vision) topographic images. Signals are also received from the animal’s own body, such as its spatial positioning. Thus, the brain forms models of the world and of itself in relation to the world.[2]

The process by which sense organs transduce and transmit stimuli into neuronal signals and internal representations is entirely physical and mechanistic at the molecular level.

The ability of an animal to form internal representations is just one of the things needed for that animal to have simple consciousness, but it is a key element. [See Footnote #3 for links elaborating on this point, and for an important point about how subjectivity is built into the very nature of life].

Memory

Brains evolved the ability to store salient mental representations (through short-term and long-term changes in neuronal connections), then access that information after intervals of time (short-term and long-term memory), integrate that information with other relevant information, and update those memories as new information becomes available.

Cognition and behavior

The neuroscientist Joseph LeDoux defines cognition as the ability to form internal representations and to use these to guide behavior. Internal representations enable an animal to react to stimuli even when the stimuli are not present. For example, there may be stored representations of a cue that has previously been associated with food, danger, or sex. Those representations can then guide behavior independent of the presence of the actual stimulus. Thus, it is the mental representation that is now guiding behavior, rather than the stimulus.[4]

Mammals have a much more complex kind of cognitive representation than do simpler animals,[5] with some deliberative capacity: the ability, as LeDoux puts it, to form mental models that can be predictive of potential things that do not yet exist. This is a much more complex cognitive capacity than simply having a kind of static memory of what's there. It enables an animal to imagine scenarios and to weigh, plan, and calculate behavioral options.

Notice how mental activity is not just caused by input from the physical world, but routinely acts back on it.[6]

Learning

As we've noted, internal representations are correlational maps. Most learning is based on establishing correlations between things—correspondences or associations. Behavioral conditioning occurs through rewards and consequences reinforcing or weakening those associations.

According to an elegant theory by neuroscientist Simona Ginsburg and evolutionary biologist Eva Jablonka, which I have reviewed elsewhere, learning may actually be the fundamental driver of the evolution of consciousness. As Ginsburg and Jablonka state: “The evolution of learning and the evolution of consciousness are intimately linked, even entangled.”[7] Their theory proposes that a form of associative learning/conditioning that they call "unlimited associative learning" is an evolutionary marker of simple consciousness.[8]

Value

Positive and negative reinforcement obtained in the process of learning by association confers valence or affect to experience. Thus, brains assign value to stimuli—"good"-ness or "bad"-ness. This forms the basis for the evolution of feeling and emotion, and the fuel for motivation and goal-directedness.[9]
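One way to make the idea of learned value concrete is a Rescorla-Wagner style update, a standard textbook model of conditioning. It is not drawn from the sources cited in this post, and the function name, learning rate, and outcome values below are illustrative assumptions. The sketch shows a cue's stored value drifting toward the outcomes that repeatedly follow it.

```python
def learn_value(outcomes, learning_rate=0.5, value=0.0):
    """Track the value assigned to a cue across repeated pairings.

    Each outcome is a number: positive for reward, negative for punishment.
    All names and numbers here are hypothetical, chosen for illustration.
    """
    history = []
    for outcome in outcomes:
        # Difference between what happened and what the cue predicted.
        prediction_error = outcome - value
        # Nudge the stored value toward the observed outcome.
        value += learning_rate * prediction_error
        history.append(value)
    return history


food_cue = learn_value([1.0, 1.0, 1.0])      # cue repeatedly paired with reward
shock_cue = learn_value([-1.0, -1.0, -1.0])  # cue repeatedly paired with punishment
print(food_cue)
print(shock_cue)
```

In this toy run the food cue acquires an increasingly positive value and the shock cue an increasingly negative one, which is the sense in which association can attach "good"-ness or "bad"-ness to a stimulus.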

All this, of course, occurs entirely physically and mechanistically, at the molecular level—just like all the other processes we have discussed, and just like every aspect of brain functioning that produces the “mind.”

Self-representations and self-awareness

Self-representations (the brain's mapping of the body and its spatial positioning and actions) combine with affect (feeling) to provide a sense of ownership of the body and of perception, a feeling of presence and agency—the sense of being an entity that is doing the experiencing. A feedback loop of the brain’s modeling, predicting, and controlling of the body’s internal state and actions reinforces the sense of self.

Humans’ complex sense of self-awareness probably arises from recursive forms of self-representation—the brain modeling its models of itself, in what Douglas Hofstadter referred to as a “strange loop.”[10]

The self is a very stable illusion constructed at a high level of abstraction from layers of underlying mental activity. The self has no perceptual access to the underlying neural processes from which it is built. It has no need for such access from an evolutionary point of view, so it never evolved to understand its own building blocks. All that the brain “knows” is its simplified, non-physical model of its own attention processes.

Complex, abstract representations

At a basic level, internal representations are simply sensory images (as was illustrated by Figure 1). Complex brains form representations of representations. Abstract mental concepts are constructed as higher-order representations from symbolic, analogous correspondences to physical things; they are still assembled, at bottom, from the building blocks of sensory images.[11, 12]

Predictions and expectations

Predictions are key to how the brain processes information and learns. The brain is a prediction machine: As the brain learns to recognize patterns of information, so it acquires the ability to predict the form that new information will take and to form expectations about sensory input. This improves efficiency of information processing by imposing top-down predictions on partial information as it is received bottom-up from the senses. This short-cutting reduces the amount of energy and resources required to process all that information. Mental representations are central to this process of making predictions.[13]
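The short-cutting described above can be illustrated with a deliberately simple sketch. It assumes a stored expectation and a partial sensory input are both lists of feature values, with `None` marking features that were not sensed. The function name and data layout are hypothetical, and real predictive-processing models are far richer than this.

```python
def perceive(expected, observed):
    """Combine a top-down expectation with partial bottom-up input.

    `observed` uses None where no sensory evidence arrived.
    Returns the completed percept and the number of surprises,
    that is, sensed features that contradicted the expectation.
    """
    percept = []
    surprises = 0
    for predicted, sensed in zip(expected, observed):
        if sensed is None:
            # No evidence: fill the gap from the prediction.
            percept.append(predicted)
        else:
            # Evidence wins over the prediction.
            percept.append(sensed)
            if sensed != predicted:
                surprises += 1
    return percept, surprises


percept, surprises = perceive([1, 0, 1, 1], [1, None, 0, None])
print(percept, surprises)
```

Only the mismatched feature needs further processing in this sketch; everything that agrees with, or is filled in by, the expectation comes essentially for free.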

Resonance

When inputs match expectations, a neural state of resonance occurs, which, according to one influential theory, is the conscious experience. The neuroscientist Stephen Grossberg formulated a theory of how brains learn to attend, recognize, and predict objects and events in a changing world, called Adaptive Resonance Theory (ART). What follows is a highly condensed explanation of this complex and sophisticated theory:

When an active expectation matches attended critical features well enough, excitatory bottom-up and top-down signals mutually reinforce each other between the features and the active recognition category that is reading out the expectation. The result is a state of resonance in which the matched signals are synchronized, amplified, and prolonged long enough for us to consciously recognize the attended object or event.[14]
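The phrase "matches attended critical features well enough" can be sketched as a threshold test, loosely modeled on the vigilance check used in the simplest binary ART models. This is a heavily simplified illustration under stated assumptions: features and expectations are binary lists, the function name and the vigilance value of 0.75 are hypothetical, and the sketch leaves out the synchronization, amplification, and persistence that the theory treats as essential to resonance.

```python
def resonates(features, expectation, vigilance=0.75):
    """Return True if the top-down expectation matches enough attended features.

    `features` and `expectation` are equal-length lists of 0s and 1s.
    The match ratio is the share of active input features that the
    expectation also predicts. All names and values are illustrative.
    """
    attended = sum(1 for f in features if f)
    if attended == 0:
        return False  # nothing attended, so nothing to match
    matched = sum(1 for f, e in zip(features, expectation) if f and e)
    return matched / attended >= vigilance


seen = [1, 1, 1, 1, 0]
print(resonates(seen, [1, 1, 1, 0, 0]))  # 3 of 4 attended features matched
print(resonates(seen, [1, 0, 0, 0, 1]))  # 1 of 4 attended features matched
```

With these toy inputs the first expectation passes the threshold and the second does not. In ART, a failed match of this kind triggers a search for a better-fitting category rather than resonance.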

Resonance is the physical instantiation of subjective awareness: “It cannot be overemphasized that these resonances are not just correlates of consciousness. Rather, they embody the subjective properties of individual conscious experiences.”[15]

The computational neuroscientists Ogi Ogas and Sai Gaddam, in describing Stephen Grossberg's ART, note:

If a mind is not capable of resonance, it is not capable of consciousness. We can go one step further: because a pair of mental representations is an essential component of every resonant state, we know that a mind that lacks representational thinking cannot be conscious.[16]

Language and culture

Humans have the ability to share their internal mental states by employing shared attention and symbolic language,[17] and to mirror these to each other, thereby building a more sophisticated sense of self.[18] From language comes the ability to develop extremely abstract ideas, with almost unlimited complexity. And from language emerges the potential for practically limitless cultural evolution and the ratcheting up of collective cumulative learning.[19] Some neuroscientists, including Ogas and Gaddam,[20] consider language essential to higher forms of consciousness.[21]

Conclusion

Internal representations are, of course, just part of the answer to the question of how consciousness emerges from physical neuronal processes, but they are key to explaining how subjective mental experiences are fundamentally physical phenomena—how consciousness emerges from matter.

References

[1] Todd E. Feinberg and Jon M. Mallatt, Consciousness Demystified (Cambridge, MA: MIT Press, 2018), Plate 1.

[2] Internal representations should not be imagined as actual pictures residing somewhere in the brain. Rather, they are diffusely encoded elements / traces of information stored across distributed neuronal networks, interconnected in highly complex ways. The elements of the composite representation are correlated with each other through the principle that neurons that fire together wire together (known as Hebb’s Law). The “imagined” composite image of the mapped external reality is reconstructed by the brain from all this intercorrelated distributed information.

[3] See my posts The Evolutionary Origins of Consciousness and How Consciousness Evolved as a Purely Physical Phenomenon for more discussion about the key role of internal representations in the evolution of simple conscious experience, beginning in primitive animals.

For a list of additional necessary ingredients required for an animal’s nervous system to be capable of producing simple consciousness, see my post Learning May Be the Key to the Evolution of Consciousness—specifically the section in that post titled “Characteristics of Minimal Consciousness—A Consensus List,” compiled by neuroscientist Simona Ginsburg and evolutionary biologist Eva Jablonka.

Feinberg and Mallatt, in their theory of the evolution of consciousness, list what they refer to as special neurobiological features, which apply to animals with primary consciousness. These include:

-- Neural complexity (a brain with many neurons and many subtypes of neurons)

-- Elaborated sensory organs (e.g. image-forming eyes)

-- Neural hierarchies with unique neural-neural interactions—including extensive reciprocal (re-entrant, recurrent) communication within and between the hierarchies for the different senses

-- Neural pathways that create mapped mental images or affective states (isomorphic representations, affective states)

-- Attention as a participant in consciousness (selective attention mechanisms)

-- Memory

(For elaboration, see: Todd E. Feinberg and Jon M. Mallatt, Consciousness Demystified (Cambridge, MA: MIT Press, 2018), pp. 68-69, 79-87.)

ON THE ORIGINS OF SUBJECTIVITY:

As I stated in my post on The Evolutionary Origins of Consciousness: It’s worth noting that subjectivity is built into the very nature of life: as soon as the first cell evolved there was an inside and an outside, and therefore the beginnings of a subjective-objective divide between the body and the outside world. There is no physicalist / materialist principle that says you can’t have a divide between the interior and exterior world, with the interior being inaccessible to the exterior. There’s no need for mystery or mysticism in trying to understand this divide and why the interior of an animal’s experience is inaccessible to an objective observer.

[4] Or, as Ginsburg and Jablonka (mentioned above in Footnote #3) put it: “The brain gets chemical and electrical inputs from within the body and from the outside world, processes them through interactions among different brain areas, and sends the output signals back to the body in an ongoing reciprocal ‘dialogue.’ Newly constructed representations update and evaluate previously remembered representations, motivating the individual to act or to refrain from acting.” [Simona Ginsburg, Eva Jablonka, Anna Zeligowski (Illustrator), Picturing the Mind: Consciousness through the Lens of Evolution (Cambridge, MA: The MIT Press, 2022), p 29]

[5] LeDoux provides evidence (as do Feinberg and Mallatt) that all vertebrates have brains that store internal representations, and even invertebrates have basic cognitive representational ability. LeDoux, in his book The Deep History of Ourselves: The Four-Billion-Year Story of How We Got Conscious Brains (New York City: Viking, 2019), outlines the gradual evolution of more complex internal representations in more complex brains. See also Footnote #9 discussing LeDoux’s notion of schema.

[6] Action is initiated by neural activity in the motor cortex forming a mental representation of an action plan, such as lifting your hand. That neural activity is transmitted via motor neuron signals to the muscles to execute the action. In fact, with current technologies, people can initiate and control movement devices directly by their cortical brain activity—that is, just by thinking about a desired movement. And with newer technologies, people can even learn to control synthetic speech devices through activity in the speech-producing centers of their brain.

[7] Picturing the Mind, p 35. Their theory, which I reviewed in my post Learning May Be the Key to the Evolution of Consciousness, was developed in depth in their academic tome: Simona Ginsburg and Eva Jablonka, The Evolution of the Sensitive Soul: Learning and the Origins of Consciousness (Cambridge, MA: The MIT Press, 2019).

[8] They suggest that the organizational dynamics of Unlimited Associative Learning (UAL) constitute the dynamics of minimal consciousness: “The neural dynamics that enable the functioning of complex perception and action…is minimal consciousness. It is what renders an animal sentient.” (The Evolution of the Sensitive Soul, p.350)

[9] Feinberg and Mallatt theorize that an animal that shows complex operant conditioning, in which behaviors are modified through the association of stimuli with reinforcement or punishment, possesses positive and negative feelings or affects. [Todd E. Feinberg and Jon Mallatt, “Unlocking the ‘Mystery’ of Consciousness,” Scientific American, October 17, 2018. https://blogs.scientificamerican.com/observations/unlocking-the-mystery-of-consciousness/] They postulate that it is the combination of images (representations) and affects that produces primitive forms of consciousness / subjectivity. [Todd E. Feinberg and Jon M. Mallatt, Consciousness Demystified (Cambridge, MA: MIT Press, 2018), p. 108, and plate 10.]

In similar vein, the neuroscientist Joseph LeDoux proposes that mental models, or schema, are the non-conscious basis of emotional experiences. He defines schema as bundles of conceptually interrelated memories (and memories are simply stored information). Schemas are the building blocks of cognition. They allow new information to be conceptualized based on past experience, and to be stored and remembered more efficiently. “Emotion schema” are memories related to particular kinds of situations involving emotions. They are non-conscious bodies of knowledge about emotions that help us conceptualize situations involving challenges and opportunities. [Joseph LeDoux, “An Emotion Is...” Psychology Today, November 11, 2019; and Joseph E. LeDoux, The Deep History of Ourselves: The Four-Billion-Year Story of How We Got Conscious Brains (New York City: Viking, 2019), p. 231, p. 352]

For further discussion about the evolution of feeling (and its thoroughly mechanistic chemical basis), see my post The Brain as a Prediction Machine: The Key to the Self? including footnotes #15-23 elaborating on Antonio Damasio's theories.

[10] Douglas Hofstadter, I Am a Strange Loop (New York: Basic Books, 2007).

[11] Abstract thoughts are thus just higher-level representations built from hierarchies of representations. Each higher level representation distills the essence of the more concrete lower level representations (i.e. more abstract, metaphorical concepts incorporate the analogical elements of simpler, more concrete features. Simply put, one thing reminds us of another because of some similar feature). At the bottom of this hierarchy are the physical sense perceptions and movements, upon which all other thoughts are built. Abstract thoughts are in essence still fundamentally just "maps" corresponding to the external environment and the individual’s position in it.

[12] The neuroscientists Ogi Ogas and Sai Gaddam, in their book Journey of the Mind: How Thinking Emerged from Chaos (New York: W. W. Norton & Company, 2022), outline a theory of the evolution of increasingly complex minds. The ability to form mental representations is one step along this “journey.” Summarizing their theory in an interview with the Next Big Idea Club, they state:

“Each pattern-thinking mind is a community of point-thinking minds. Each neuron is a self-contained point-thinking mind. It takes a whole network of interconnected neurons—of interconnected point-thinking minds—to think about patterns. In turn, to think about objects, networks of neurons had to learn to communicate with one another. Networks learned to communicate using mental representations. It takes a community of interconnected networks exchanging representations with one another to think about objects.” (See also Footnote #19)

[13] Joseph LeDoux discusses the effects of top-down predictive coding on perception “in terms of schema or mental models that guide pattern completion and separation. They can be said to provide the ‘priors’ underlying the unconscious predictions / inferences that, in turn, complete the patterns from limited sensory cues.” [The Deep History of Ourselves, p.292. Elaborated in chapters 46-48 of the book].

LeDoux goes on to say: “The idea that conscious states entail conceptual predictions based on priors (memories) related to lower-order sensory processes fits well with the theme I have been developing—that conscious experiences are higher order states that depend on memory.” [The Deep History of Ourselves, p.293].

He refers to “pattern completion:” “Schema work their magic by taking advantage of the ability of the brain to complete patterns from partial information, a process called pattern completion.” [The Deep History of Ourselves, p.231]

[14] Stephen Grossberg, “How Does Your Brain Create Your Conscious Mind?” Psychology Today, August 17, 2021.

[15] Grossberg S. (2017). Towards solving the hard problem of consciousness: The varieties of brain resonances and the conscious experiences that they support. Neural networks : the official journal of the International Neural Network Society, 87, 38–95. https://doi.org/10.1016/j.neunet.2016.11.003

Stephen Grossberg’s almost 800 page Magnum Opus, Conscious Mind, Resonant Brain: How Each Brain Makes a Mind (New York: Oxford University Press, 2021) explains how, where in our brains, and why from a deep computational perspective, we can consciously see, hear, feel, and know things about the world, and use these conscious representations to effectively plan and act to acquire valued goals. Adaptive Resonance Theory, or ART, detailed in the book, offers a rigorously substantiated solution of the classical mind-body problem.

ART may well be the most advanced cognitive and neural theory currently available that explains how humans learn to attend, recognize, and predict objects and events in a changing world that is filled with unexpected consequences.

ART, which can be derived from a thought experiment, describes how any system can learn to autonomously correct predictive errors in a changing world that is filled with unexpected events. The hypotheses on which this thought experiment is based are familiar facts that we all know about from daily life. They are familiar because they represent ubiquitous evolutionary pressures on the evolution of our brains. When a few such familiar facts are applied together, these mutual constraints lead uniquely to ART.

Nowhere during the thought experiment are the words mind or brain mentioned. The result is thus a universal class of solutions of the problem of autonomous learning and error correction in a changing world that is filled with unexpected events.

Grossberg’s CogEM (Cognitive-Emotional-Motor) model of how cognition and emotion interact is also derived from a thought experiment. CogEM explains many data about cognitive-emotional interactions.

Combining ART and CogEM leads to the results on consciousness, because they naturally emerge from an analysis of how we can quickly learn about a changing world without experiencing catastrophic forgetting. Grossberg calls this a solution of the stability-plasticity dilemma (how a brain or machine can learn quickly about new objects and events without just as quickly being forced to forget previously learned, but still useful, memories).

Taken together, these models clarify how normal brain dynamics can break down in specific and testable ways to cause behavioral symptoms of multiple mental disorders, including Alzheimer's disease, autism, amnesia, schizophrenia, PTSD, ADHD, auditory and visual agnosia and neglect, and disorders of slow-wave sleep.

Its exposition of how our brains consciously see enables the book to explain how many visual artists, including Matisse, Monet, and Seurat, as well as the Impressionists and Fauvists in general, achieved the aesthetic effects in their paintings and how humans consciously see these paintings.

The book goes beyond such purely scientific topics to discuss foundational aspects of the human condition. These results clarify how our brains support such vital human qualities as creativity, morality, and religion, and how so many people can persist in superstitious, irrational, self-defeating, and even self-punitive behaviors in certain social environments.

[16] Ogi Ogas and Sai Gaddam, Journey of the Mind: How Thinking Emerged from Chaos (New York: W. W. Norton & Company, 2022), p.237.

Ogas and Gaddam were both graduate students in the Department of Cognitive and Neural Systems at Boston University that Stephen Grossberg founded and led as chairman for 17 years. Their book Journey of the Mind is a popular science book that includes an exposition of Grossberg's original ideas, which have been so influential to them and very many others (they compare Grossberg to Newton in certain ways). Grossberg's ideas are laid out in much more detail in his Magnum Opus Conscious Mind, Resonant Brain (Footnote #15). Ogas and Gaddam attempt in Journey of the Mind to disseminate and explain Grossberg's ideas to a general readership.

They derive three “laws of consciousness” from the mathematics of Grossberg’s unified theory of mind:

1) All conscious states are resonant states

2) Only resonant states with feature-based representations can become conscious

3) Multiple resonant states can resonate together

(Feature-based representations are associated with external senses such as vision or hearing, and internal senses linked to emotion)

(Journey of the Mind, p.230)

Footnote #21 elaborates on Ogas and Gaddam's theories, which draw upon Grossberg’s framework. The concept of resonance is further explained in that footnote.

[17] Journey of the Mind, Ch. 17.

[18] Journey of the Mind, Ch. 19.

[19] Ogi Ogas and Sai Gaddam, summarizing their theory in an interview with the Next Big Idea Club propose, in summary, the following theory (this is a continuation of the quote in Footnote #12): “And finally, there’s us, the idea-thinkers—where did we come from? Object-thinking minds teamed up with one another to form a community of object minds. This network of object minds—this supermind—can think about ideas. So what form of physical connectivity bound together human brains into a supermind? Language. Language empowers the sapiens supermind to think about ideas.”

[20] Also Ginsburg and Jablonka.

[21] Ogas and Gaddam summarize Grossberg's conclusion that the specific physical activity that generates conscious experience is resonance. Resonance occurs within a module (the different types of modules are listed below) when a prediction matches the facts on the ground: when a bottom-up sensory stimulus (the facts) matches a top-down stored representation (the prediction). Resonance is the physical instantiation of subjective awareness.

Each consciousness-generating module produces its own distinctive form of subjective experience, or qualia: Grossberg has provided unifying explanations of different kinds of conscious experiences using six different kinds of resonances that he summarizes in Table 1.4 (p.42) of Conscious Mind, Resonant Brain: How Each Brain Makes a Mind:

TYPE OF RESONANCE: TYPE OF CONSCIOUSNESS

-- Surface shroud: see visual object or scene

-- Feature-category: recognise visual object or scene

-- Stream-shroud: hear auditory object or stream

-- Spectral-pitch-and-timbre: recognise auditory object or stream

-- Item list: recognise speech and language

-- Cognitive-emotional: feel emotion and know its source

In their popular science book Journey of the Mind, Ogas and Gaddam paraphrase for a more general readership the names of these resonances and the types of consciousness that they support, as follows:

-- Visual Where module: Seeing

-- Visual What module: Knowing

-- Auditory Where module: Hearing

-- Auditory What module: Knowing

-- Sequential What module: Synthesizing

-- Why module: Feeling

Expounding Grossberg's theories, Ogas and Gaddam suggest that each of these modules is independently capable of generating conscious experience through its own unique form of resonant dynamics, but multiple modules can also synchronize their dynamics to produce an integrated conscious experience. For example, you can simultaneously see and hear someone jump in a lake, resulting from synchronized resonant dynamics between the Visual Where module and the Auditory Where module. Ogas and Gaddam refer to the collection of mental modules as a “consciousness cartel” (Journey of the Mind, Ch. 16): "our mental economy is governed by a consciousness cartel. Each member of this cartel is a distinct type of neural resonance."

One of the most important and enigmatic forms of human mental activity is the investment of raw sensory information with private meaning, in the form of language. According to Ogas and Gaddam, our experience of symbolic meaning (such as picturing a flying nocturnal mammal when we hear the word "bat") always involves the resonance of at least two distinct modules: the language module (which produces “synthesizing qualia,” the conscious experience of recognizing the word “bat”) and at least one other module producing qualia that are experienced as meaning (such as the Visual What module producing a visual quale of a flying mammal).

Only human minds can forge a mental link between words and meanings easily, instinctively, and incessantly, because only human minds have evolved resonant connectivity between our language module and other consciousness-generating modules. Bird minds, which lack connectivity between their birdsong module and other modules, are conscious of the notes, syllables, and phrases of birdsong–but they are not conscious of their symbolic meanings.