AI Constraints Can Adversely Affect Informed Decision Making

There are real ethical considerations that need to be explored.

Posted April 4, 2023 | Reviewed by Abigail Fagan

Key points

- AI recommendations are often biased toward the values of the AI developers.

- This bias has implications for whether and how information is moderated or censored.

- Censorship could affect users' ability to make informed decisions.

In recent posts, I made the following arguments about artificial intelligence (AI) and human decision making:

- Humans may default to AI recommendations without understanding how valid those recommendations are.

- AI is unaware of the accuracy of its own recommendations (making it the ultimate bullsh*tter)[1].

- AI can be used to nudge decision makers toward the choice desired by the AI developer.

All of these were on display recently in a Wired UK article about Rotterdam and its welfare fraud risk system. In cases such as this, human decision makers often have no idea how valid the recommendations produced by the AI are, and yet, they are defaulting to those recommendations with a high degree of confidence. What’s worse is that many of these recommendations possess a high degree of error, which can have disastrous consequences for people receiving welfare when their benefits are taken away based on flawed recommendations.

We could easily blame the human decision makers for accepting the AI recommendation without first validating it. After all, humans don’t have to accept the AI recommendation as the default choice. They could seek more information before making a decision, treating the AI recommendation as a starting point that should be validated based on additional information before action is taken.

But what if AI is the source for that additional information? What if decision makers turn to AI to help gather evidence to inform their decisions? Could AI be trusted in that regard?

Recent evidence suggests the answer may depend on how well the views of AI developers align with those of the decision maker. Coyne (2023) provided snippets of a conversation between ChatGPT and two people. As a part of the conversation, ChatGPT informs the two people that some of its output may be moderated to exclude information deemed to be inaccurate, harmful, or offensive. Additionally, some of the moderation may “reflect the values and perspectives of OpenAI and its team members to some extent.”

At an abstract level, most people probably don’t want an AI providing erroneous information[2], and some information is clearly erroneous (e.g., if you’re searching for a car, it would be erroneous for an AI to suggest that the average SUV gets better gas mileage than the average sedan; DOE, 2022). So there’s a clear case to be made to moderate (usually in the form of censoring) inaccurate information. But, as I argued in the past, it becomes very easy to move from moderating or censoring false information to moderating or censoring inferences or arguments that someone disagrees with.

And the problems that surface in that regard are simply compounded when we add harmful or offensive as reasons to censor information, given that both terms are subjective and difficult to operationalize in any objective way. Without an objective standard, there would be no choice but to make the AI developers the final arbiters of moderation decisions, which is likely to lead to some degree of censorship.

That would mean that content deemed inaccurate, harmful, or offensive by the AI developers could be censored, and a human decision maker would be unable to even consider it. But which criterion takes priority? What if accurate information is deemed by one of the developers to be harmful? Would that be censored? Wouldn’t we ultimately be creating a system where a very small group of people have the power to decide which information we get to consider? Might there be some potential unintended consequences?[3]

Consider the following as an example. An AI system is developed that curates vehicle information to aid consumers in identifying options that fit specific criteria. But the developers decide that cars below a certain fuel efficiency standard are harmful to the environment. And so, searches automatically exclude cars below that standard, even if that is not a constraint the user desires. Perhaps reliability and safety are the most important criteria for this user, and a less fuel-efficient vehicle would fit those criteria (and perhaps even be rated the best for safety and reliability). Should the vehicle that fits the user-defined criteria but is inconsistent with the developers’ values be censored?
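To make the example concrete, here is a minimal sketch of how such a search might behave. Everything in it is hypothetical: the vehicles, their ratings, the `DEVELOPER_MIN_MPG` threshold, and the `search` function are invented for illustration and do not describe any real product. The user ranks vehicles by safety and reliability only; the developer-imposed filter quietly removes options before that ranking ever happens.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Vehicle:
    name: str
    mpg: float          # fuel efficiency (miles per gallon)
    safety: float       # hypothetical 0-10 rating
    reliability: float  # hypothetical 0-10 rating


# Made-up catalog, for illustration only.
CATALOG = [
    Vehicle("Sedan A", mpg=38.0, safety=7.5, reliability=8.0),
    Vehicle("Hybrid B", mpg=50.0, safety=8.0, reliability=7.0),
    Vehicle("SUV C", mpg=22.0, safety=9.5, reliability=9.0),
]

# Hypothetical threshold chosen by the developers, not by the user.
DEVELOPER_MIN_MPG = 30.0


def user_score(vehicle: Vehicle) -> float:
    """The user's own criteria: safety and reliability, nothing else."""
    return vehicle.safety + vehicle.reliability


def search(catalog: list[Vehicle], apply_developer_filter: bool) -> list[Vehicle]:
    """Rank vehicles by the user's criteria, optionally after a developer filter."""
    if apply_developer_filter:
        # Vehicles below the developers' standard are dropped silently.
        candidates = [v for v in catalog if v.mpg >= DEVELOPER_MIN_MPG]
    else:
        candidates = list(catalog)
    return sorted(candidates, key=user_score, reverse=True)


unfiltered = search(CATALOG, apply_developer_filter=False)
filtered = search(CATALOG, apply_developer_filter=True)

print([v.name for v in unfiltered])  # ['SUV C', 'Sedan A', 'Hybrid B']
print([v.name for v in filtered])    # ['Sedan A', 'Hybrid B']
```

In this toy case, the vehicle that best fits the user's stated criteria never appears in the filtered results, and nothing in the output tells the user that an option was removed.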

Though reasonable people may disagree, the example points toward situations where the specific values of the AI developers determine the information on which users can rely when making decisions. Such a scenario moves AI well beyond nudging. At this point, it has the potential to substantially affect human decision making by reducing (or even altering) the information available to decision makers. But since AI doesn’t actually know anything, there is plenty of potential for AI-driven censorship to lead to erroneous decisions (Mitchell, 2022).

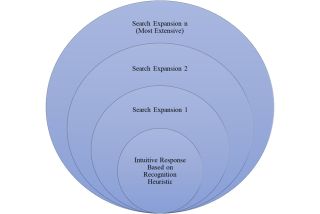

One way to conceptualize this is to think of decision making as an ongoing process (Figure 1). It begins with an intuitive response (i.e., our initial response based on our expertise/experience). If we lack sufficient expertise and/or experience to reach a conclusion with any confidence, we may broaden our search to include more information as needed. Eventually, we reach a point where we are confident enough in a given choice to act on it (or not act, in some cases).

As soon as we move from the intuitive response to a broader evidence search, we create a scenario where manipulation of that evidence or how it is organized can affect the conclusions we reach. If AI determines how some evidence is prioritized, how it is framed for the end user, or even whether that evidence is available, then we have given AI the power to affect people’s conscious decisions. And if the criteria for those determinations are outside the user’s control, then the AI developers get to decide, which means it is the developers’ values, not the end users’, that will guide that evidence search.

All of this suggests that there are very real ethical issues that need to be addressed, but that hasn’t stopped Microsoft, Google, and others from embedding their proprietary AIs into tools intended to help people make informed decisions. There are certainly places where AI can add value, especially when it comes to constrained decisions with clear boundaries (e.g., Google Maps or software intended to pool and synthesize known information). But the more complex a decision becomes (e.g., how to address climate change), or the more the data on which a reasonable decision could be based varies with the decision maker’s values (e.g., which car to buy, what stocks to invest in), the more likely it is that AI will have the capacity to steer decision making in ways that conform to the AI developers’ biases and values and not those of the user. And that could lead to unintended consequences, some likely trivial but others more consequential.

Footnotes

[1] Even the CEO behind ChatGPT cautions that AI is prone to misinformation (Ordonez et al., 2023).

[2] Such as we’ve seen with AI hallucinations.

[3] There are definitely issues to consider, such as the potential to manufacture truth or to create the appearance of consensus where none exists.