
The Hidden Dangers of Expert Overconfidence

Experts can be very confident, even when they’re wrong.

Posted April 1, 2024 Reviewed by Devon Frye

Key points

- When experts venture outside their expertise bubble, their expertise may be questionable.

- A recent study suggests experts are more confident when they're right than when they're wrong.

- But they also show higher confidence than nonexperts, even when they are wrong.

- It may be difficult to differentiate the quality of expertise based strictly on expert confidence.

Early in my time writing for Psychology Today, I wrote about the expertise bubble, a topic that fit well with what was going on in the world (I wrote the piece in May 2020). One of the points I made in that piece was this:

"When experts venture into topical areas farther removed from their prior experience, they are more likely extending their expertise bubble into questionable domains. This is not necessarily a problem, unless the expert is also demonstrating a high level of confidence in the claims s/he is making. In such cases, further validation of the claims may be required." (italics added)

What remained unclear at the time, though, was whether experts necessarily recognized when they were straying from their expertise bubble in a way that could result in erroneous conclusions. Some recent evidence produced by Han and Dunning (2024) suggests the answer is yes—but with some caveats.

The Basics of the Research

Han and Dunning conducted three studies: one with climate scientists, one with psychologists, and one with investors. In each study, experts were selected based on their credentials. They were then given a set of closed-ended questions (either true/false or multiple choice [1]) and asked to rate their confidence in each response. [2]

But they didn’t stop there. They also included a comparison sample of nonexperts for each of those studies. They compared the experts and nonexperts in terms of their performance, overall confidence, and—most importantly—the difference in confidence estimates for correct and incorrect answers.

The Results

Unsurprisingly, across all three studies, experts outperformed nonexperts on the test questions, with the differences being much larger in the climate science and investor studies than in the psychologist study. [3] Experts tended to be more confident in their correct answers than in their incorrect answers, while nonexperts tended to demonstrate similar levels of confidence whether their answers were correct or not.

Across the board, though, experts demonstrated higher overall confidence in their responses than nonexperts, and this was true for both correct and incorrect answers. In other words, experts were more confident than nonexperts both when they were correct and when they were incorrect. In fact, experts tended to be at least as confident in their incorrect answers as nonexperts were in their correct answers.

Putting the Results Into Context

It goes without saying that a closed-ended set of questions is a very narrow way of assessing the correspondence between expertise and accuracy. But putting that aside for a moment, the results suggest that experts can at least somewhat differentiate between when they are operating inside their expertise bubble and when they are straying from it. [4] The fact that expert confidence varied significantly between correct and incorrect answers suggests experts at least have a sense of when they are less certain about the insight provided by their expertise.

Even when they were wrong, though, experts were still more confident in their responses than they should have been. Some of this, as Han and Dunning argue, can be explained by a system that “favors assertive knowledge.” In other words, experts navigate a world where they are expected to have the answer and to demonstrate confidence in it.

There is little incentive for experts to develop a sense of humility that could lead to doubt, especially when an expert who lacks confidence is unlikely to be an improvement over an expert who has too much of it. So even when they are less certain, they may still demonstrate high confidence in their assertions.

As such, there is a tension to manage. A deficiency of confidence is likely to hinder an expert’s performance, but an overabundance of it is also likely to lead to erroneous conclusions. And experts who are too confident in their conclusions may be motivated to seek out information that merely confirms them (a form of motivated reasoning), even in the face of strong contradictory evidence.

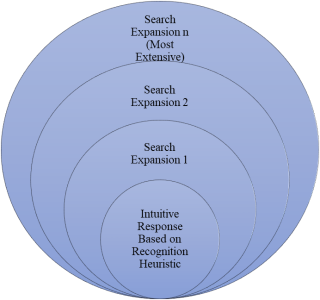

Hence, some skepticism toward experts’ own confidence seems warranted. Although experts are more likely to produce valid intuitive responses when confronted with a situation where their expertise applies (as in Figure 1 [5]), if they are rarely willing to look beyond their intuition, they may develop a pattern of overconfidence. And that pattern of overconfidence could lead to disastrous outcomes.

Consumers of expert opinions should also recognize that when an expert appears confident, that confidence does not necessarily equate to insight or wisdom. Experts will generally be more confident than nonexperts, but if, as this study suggests, that holds even when they’re wrong, it may be difficult to tell when an expert’s confidence is appropriate and when it has strayed into overconfidence. Whether consumers can reliably distinguish the two remains unknown, but that question could be worth further study.

References

Footnotes

[1] The climate scientists and psychologists received true/false questions, while the investors were provided multiple choice questions.

[2] There were some idiosyncratic elements present in each of the studies as well, but you can dive more deeply into those by reading the research article.

[3] Psychologists scored only 9 percentage points higher than nonexperts, a much smaller gap than in the climate scientist (25-percentage-point difference) and investor (18-percentage-point difference) studies. I will not opine on that difference here, though, as it is off topic.

[4] Han and Dunning argue that this is not the case, but I disagree. The fact that expert confidence varied significantly between correct and incorrect answers suggests experts at least have a sense of when they are less certain.