Neuroscience

How the Brain Resolves Ambiguity

New research sheds light on how the brain categorizes ambiguous visual images.

Posted May 31, 2020 Reviewed by Gary Drevitch

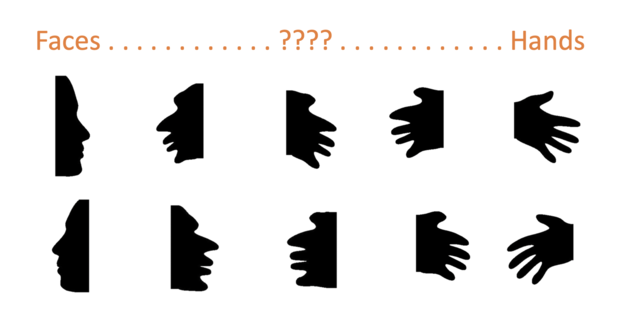

Take a look at the image above...

Is it a face or a hand?

Neither?

A little of both?

By using ambiguous 2D images such as the one above, researchers at Stanford, UC Santa Cruz, and UC Berkeley recently investigated the mechanisms by which the brain resolves visual ambiguity.

The categorical visual brain

How the brain categorizes visual information is a central question in human neuroscience. Ever since the seminal 1997 paper by Nancy Kanwisher and colleagues identified the human fusiform face area (FFA, also referred to as mFus-faces and pFus-faces), which responds selectively to faces, neuroscientists have searched the brain for other functionally selective areas. We now know, for example, that there is a region, the parahippocampal place area (PPA), that responds preferentially to places (e.g., buildings, indoor and outdoor scenes); another region in the lateral occipital cortex (LO) that activates more when we look at objects than at random textures; another region, the visual word form area (VWFA), that responds to written language and symbols; and yet another, the extrastriate body area (EBA or OTS-limbs), that responds when we look at bodies or body parts (but not faces).

These findings have led to a popular view that the brain represents visual categories in distinct modules, where one module is solely responsible for representing faces, another for representing cars, another for dogs, another for chairs, etc.

Distributed representations

An alternative view is that category representations are much more distributed across the visual brain. In another landmark paper in 2001, Jim Haxby and colleagues analyzed patterns of brain responses to several visual categories (faces, houses, cats, shoes, etc.). Then they trained an algorithm to classify brain activity patterns corresponding to each category. When presented with a new, unclassified brain response pattern, the algorithm was able to predict the correct category the participant was looking at with remarkable accuracy. Furthermore, the algorithm could predict the correct category even without considering activity in brain regions that respond maximally to that category. For example, the algorithm could predict that a participant was looking at faces using only responses in brain regions that did not respond preferentially to faces.
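The logic of this kind of pattern classification can be illustrated with a minimal sketch. It assumes a simple correlation-based classifier: a new response pattern is assigned to the category whose previously measured pattern it correlates with most strongly. The six "voxel" values, the two categories, and the function names below are hypothetical and chosen only for illustration; they are not data or code from the study.

```python
from math import sqrt
from statistics import mean


def pearson(x, y):
    """Pearson correlation between two equal-length lists of numbers."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sqrt(sum((a - mx) ** 2 for a in x))
    sy = sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def classify(pattern, templates, keep=None):
    """Return the category whose template correlates best with `pattern`.

    `keep` optionally restricts the comparison to a subset of voxel indices.
    """
    def select(values):
        return values if keep is None else [values[i] for i in keep]

    scores = {
        label: pearson(select(pattern), select(template))
        for label, template in templates.items()
    }
    return max(scores, key=scores.get)


# Hypothetical average responses in six voxels (made-up numbers).
# Voxels 0-1 respond most to faces, voxels 4-5 respond most to houses.
templates = {
    "faces": [3.0, 2.8, 0.9, 0.4, 0.7, 0.2],
    "houses": [0.3, 0.5, 0.6, 0.9, 2.9, 3.1],
}

# A new, unlabeled pattern recorded while the participant viewed a face.
new_pattern = [2.7, 3.1, 1.0, 0.3, 0.6, 0.3]

# Classify using all voxels.
print(classify(new_pattern, templates))

# Classify again after dropping the voxels that respond maximally to faces.
non_face_voxels = [2, 3, 4, 5]
print(classify(new_pattern, templates, keep=non_face_voxels))
```

In this toy example, both calls return "faces": even with the face-preferring voxels removed, the weaker responses in the remaining voxels still carry enough information to identify the category.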

The visual categories used in most previous studies, however, were clear-cut. In real life, we are often presented with noisy information, which creates ambiguity. How does the brain respond when presented with an ambiguous stimulus that could belong to two different categories?

Resolving visual ambiguity

It can be difficult to create ambiguous images that straddle categories, because categories like faces, buildings, and bodies differ in so many ways. But by using simple, shape-based silhouettes, researchers at Stanford, UC Santa Cruz, and UC Berkeley were able to create ambiguous, cross-category images that morphed from faces at one end to hands at the other:

In a study just published in the journal Cerebral Cortex, Mona Rosenke and colleagues used functional magnetic resonance imaging (fMRI) to examine brain responses while participants observed images like the ones above. In some trials, the images were clearly faces (like the items on the far left of the figure above), and accordingly, the brain showed large responses in the face-selective mFus-faces and pFus-faces, and small responses in other regions. In other trials, the images were clearly hands (like the items on the far right of the figure), which was associated with large responses in the body-selective OTS-limbs area, and small responses elsewhere. Of course, the researchers were most interested in what happens in the intermediate cases.

It turned out that across the 14 study participants, there was quite a range of responses to the intermediate stimuli, like the items in the middle column of the figure. For some participants, these ambiguous stimuli elicited brain responses that resembled those to faces. For others, the brain activity patterns looked more like responses to hands.

Outside of the fMRI scanner, the same 14 participants were then asked to categorize each of the images they had seen in the scanner as either a face or a hand. Just like their brain responses, categorizations varied across participants, with some endorsing the intermediate stimuli as faces and others as hands.

Finally, the researchers compared the brain and behavioral responses, and tested whether they could predict how a given participant would categorize an ambiguous stimulus (as a face or hand) based on their brain response patterns. Adopting Haxby's distributed encoding approach, they considered responses across multiple brain regions, specifically subtracting responses in body-selective OTS-limbs from responses in face-selective mFus-faces and pFus-faces. They then correlated this subtracted brain measure with participants’ categorizations, and found that they could reliably predict how a given participant would categorize an ambiguous stimulus based on their brain responses alone.
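The subtract-then-correlate analysis described above can be sketched in a few lines. This is an illustration under stated assumptions, not the authors' analysis: the five participants and all numbers are made up, the face-region value is assumed to be an average across mFus-faces and pFus-faces, and the behavioral measure is assumed to be the proportion of ambiguous images each participant labeled a face.

```python
from math import sqrt
from statistics import mean


def pearson(x, y):
    """Pearson correlation between two equal-length lists of numbers."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sqrt(sum((a - mx) ** 2 for a in x))
    sy = sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


# Hypothetical responses to ambiguous stimuli, one value per participant.
# face_resp: assumed average response across mFus-faces and pFus-faces.
# limb_resp: response in OTS-limbs.
face_resp = [1.8, 1.2, 0.9, 0.5, 1.5]
limb_resp = [0.6, 1.0, 1.1, 1.4, 0.7]

# Hypothetical behavior: proportion of ambiguous images labeled "face".
prop_face = [0.90, 0.55, 0.40, 0.10, 0.80]

# Combined brain measure: face-selective minus body-selective response.
# Positive values lean toward "face", negative values toward "hand".
brain_diff = [f - l for f, l in zip(face_resp, limb_resp)]

# Correlate the combined brain measure with behavior across participants.
r = pearson(brain_diff, prop_face)
print(f"correlation between brain measure and behavior: r = {r:.2f}")

# A simple tie-breaking rule: the sign of the difference predicts the label.
predicted = ["face" if d > 0 else "hand" for d in brain_diff]
print(predicted)
```

With these invented numbers, participants with a larger face-minus-limb difference also label more ambiguous images as faces, which is the kind of relationship the combined measure is meant to capture.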

While there is still much to learn about how the brain represents category information, this study provides an important clue: When confronted with ambiguous information, the brain may resort to comparing and contrasting responses across different functionally selective regions to determine which way to break the tie.

References

Kanwisher, N., McDermott, J., & Chun, M. M. (1997). The fusiform face area: a module in human extrastriate cortex specialized for face perception. Journal of Neuroscience, 17(11), 4302-4311.

Haxby, J. V., Gobbini, M. I., Furey, M. L., Ishai, A., Schouten, J. L., & Pietrini, P. (2001). Distributed and overlapping representations of faces and objects in ventral temporal cortex. Science, 293(5539), 2425-2430.

Davidenko, N. (2007). Silhouetted face profiles: A new methodology for face perception research. Journal of Vision, 7(4), 6-6.

Rosenke, M., Davidenko, N., Grill-Spector, K., & Weiner, K. S. (2020). Combined neural tuning in human ventral temporal cortex resolves the perceptual ambiguity of morphed 2D images. Cerebral Cortex, bhaa081. https://doi.org/10.1093/cercor/bhaa081